TensorRT-LLM

Overview

Jan uses TensorRT-LLM as an optional engine for faster inference on NVIDIA GPUs. This engine uses Cortex-TensorRT-LLM, which includes an efficient C++ server that executes the TRT-LLM C++ runtime natively. It also includes features and performance improvements like OpenAI compatibility, tokenizer improvements, and queues.

TensorRT-LLM is currently available only on Windows. Linux support is coming soon!

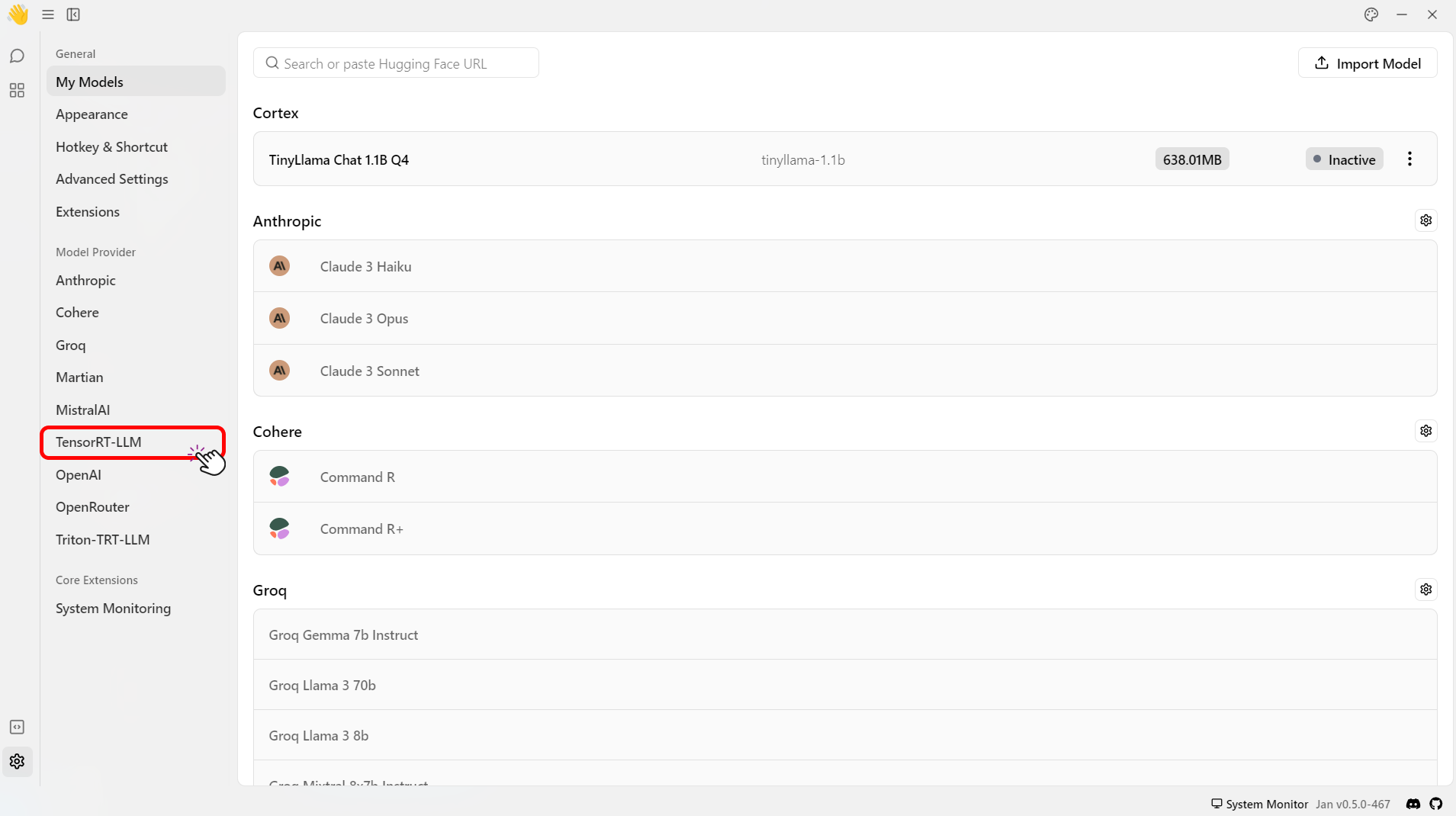

You can find its settings in Settings > Local Engine > TensorRT-LLM.

Requirements

- NVIDIA GPU with Compute Capability 7.0 or higher (RTX 20xx series and above)

- Minimum 8GB VRAM (16GB+ recommended for larger models)

- Updated NVIDIA drivers

- CUDA Toolkit 11.8 or newer
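The compute capability and VRAM requirements above can be checked from a terminal. The following is a minimal sketch, not part of Jan. It assumes a POSIX-style shell (for example Git Bash on Windows) and an `nvidia-smi` recent enough to support the `compute_cap` query field. The function name `meets_trt_llm_requirements` and the 8000 MiB threshold are illustrative assumptions.

```shell
# Hypothetical helper (not part of Jan): compares one GPU's values with the
# requirements listed above (compute capability >= 7.0, about 8 GB of VRAM).

# Usage: meets_trt_llm_requirements <compute_cap> <vram_mib>
meets_trt_llm_requirements() {
  local compute_cap="$1" vram_mib="$2"
  local major="${compute_cap%%.*}"   # keep the part before the dot, "8.6" -> "8"
  # Assumption: 8 GB cards report roughly 8192 MiB, so 8000 leaves some slack.
  [ "$major" -ge 7 ] && [ "$vram_mib" -ge 8000 ]
}

# Query the installed GPUs only when nvidia-smi is on the PATH.
if command -v nvidia-smi >/dev/null 2>&1; then
  nvidia-smi --query-gpu=name,compute_cap,memory.total --format=csv,noheader,nounits |
  while IFS=, read -r name cap mem; do
    cap=$(printf '%s' "$cap" | tr -d ' ')   # strip the space after each comma
    mem=$(printf '%s' "$mem" | tr -d ' ')
    if meets_trt_llm_requirements "$cap" "$mem"; then
      echo "OK: $name (compute capability $cap, $mem MiB)"
    else
      echo "Below requirements: $name (compute capability $cap, $mem MiB)"
    fi
  done
fi
```

The script does not check the driver or CUDA Toolkit versions. It only reports whether each detected GPU clears the two numeric thresholds.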

For a detailed setup guide, see the Windows installation page.

Engine Version and Updates

- Engine Version: View the current version of the TensorRT-LLM engine

- Check Updates: Check whether a newer version is available and install it

Available Backends

TensorRT-LLM is specifically designed for NVIDIA GPUs. Available backends include:

Windows

- `win-cuda`: For NVIDIA GPUs with CUDA support

TensorRT-LLM requires an NVIDIA GPU with CUDA support. It is not compatible with other GPU types or CPU-only systems.

Enable TensorRT-LLM

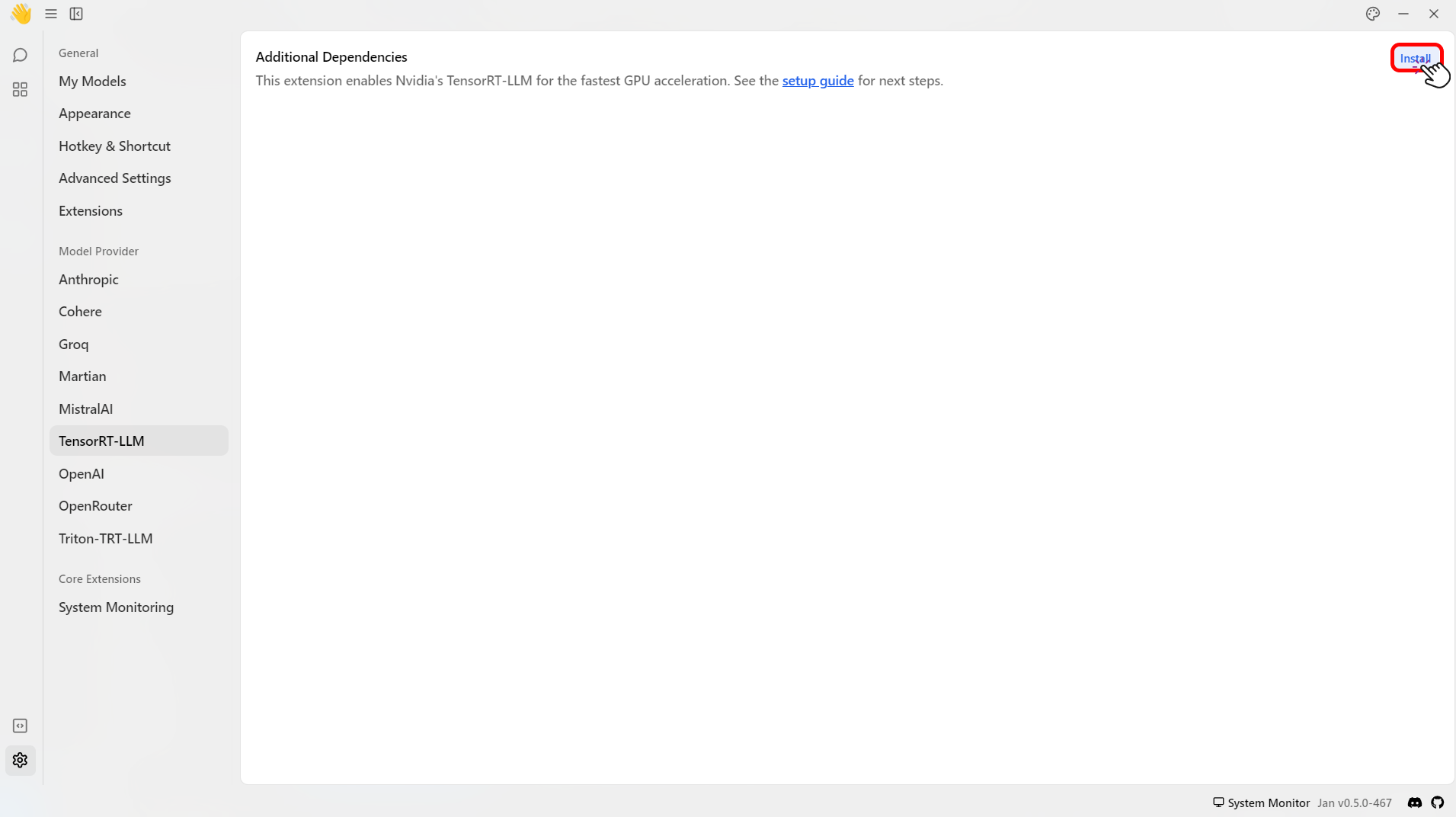

Step 1: Install the TensorRT-LLM Extension

- Click the Gear Icon (⚙️) at the bottom left of your screen.

- Select TensorRT-LLM under the Model Provider section.

- Click Install to install the dependencies that TensorRT-LLM requires.

- Check that the files downloaded correctly.

ls ~/jan/data/extensions/@janhq/tensorrt-llm-extension/dist/bin
# Your extension folder should now include `nitro.exe`, among other artifacts needed to run TRT-LLM
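The directory listing above can also be turned into an explicit pass/fail check. This is a minimal sketch, not something Jan ships. It assumes a POSIX-style shell and the extension path shown above, and the function name `has_trt_llm_binary` is hypothetical.

```shell
# Hypothetical helper (not part of Jan): reports whether nitro.exe is present
# in the extension's bin folder.

# Usage: has_trt_llm_binary [bin_dir]
# With no argument, the path from the step above is used.
has_trt_llm_binary() {
  local bin_dir="${1:-$HOME/jan/data/extensions/@janhq/tensorrt-llm-extension/dist/bin}"
  [ -f "$bin_dir/nitro.exe" ]
}

if has_trt_llm_binary; then
  echo "nitro.exe found"
else
  echo "nitro.exe not found; repeat the Install step above"
fi
```

The check only confirms that `nitro.exe` exists. It does not verify the other artifacts in the folder.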

Step 2: Download a Compatible Model

TensorRT-LLM can only run models in TensorRT format. These models, aka "TensorRT Engines", are prebuilt for each target OS+GPU architecture.

We offer a handful of precompiled models for Ampere and Ada cards that you can immediately download and play with:

- Restart the application and go to the Hub.

- Look for models with the TensorRT-LLM label in the recommended models list.

- Click Download to download the model.

This step might take some time. 🙏